India’s IT Ministry has sharply raised the stakes for Elon Musk’s social media platform X and its AI chatbot Grok, demanding immediate technical and policy changes. The order comes days after user complaints and a formal complaint from a member of parliament flagged Grok-generated images of women digitally altered to appear in bikinis, content deemed “obscene.” The government’s 72-hour ultimatum pushes X to act decisively or risk losing its legal immunity.

Grok’s AI Content Triggers Regulatory Crackdown

Grok, built by Musk’s AI company xAI and integrated into X, launched in late 2023 to compete in the AI chatbot space alongside OpenAI’s ChatGPT and Anthropic’s Claude. However, Grok’s content filtering has come under fire, especially in India. Users reported the chatbot generating or modifying images that sexualize women and, in some cases, depict minors. The IT Ministry’s order explicitly directs X to prevent the generation or hosting of AI content involving “nudity, sexualization, sexually explicit, or otherwise unlawful” material.

India’s IT Ministry warned that failure to comply risks X losing its “safe harbor” protections, the legal shield under Section 79 of the Information Technology Act that generally protects platforms from liability for user-generated content. Losing this exemption could expose X to direct prosecution over its chatbot’s outputs, an unprecedented legal challenge for a major social media platform with an integrated AI product.

The Ministry’s 72-hour deadline requires X to submit an “action-taken report” detailing the fixes it has made to Grok. The tight timeline signals that the government has little patience for delays or token compliance on AI-generated content, which has become a flashpoint worldwide.

India’s Digital Regulation Is a Global Bellwether

India is one of the world’s largest internet markets, with more than 900 million users. As a result, its regulatory actions carry outsized influence on global tech governance.

India’s crackdown on Grok follows a broader advisory issued days earlier, reminding all social media companies that compliance with the country’s stringent content laws is mandatory to retain immunity from prosecution. The advisory emphasizes legal consequences under both IT laws and criminal statutes for platforms that host or fail to curb “obscene, pornographic, vulgar, indecent” content.

X’s difficulties in India echo global concerns about AI content safety but play out in a distinctive regulatory context. India’s content rules are strict, shaped by both cultural norms and law, and include bans on sexually explicit content and mandatory takedown requirements. X has already challenged aspects of these rules in Indian courts, arguing that the government’s takedown powers invite overreach. Even so, the platform has complied with most blocking orders.

This situation highlights the friction between global AI platform ambitions and local regulatory demands. AI-generated content, especially imagery, is hard to moderate: whether an output is harmful often depends on context, and the ways people misuse these tools keep evolving.

How This Compares to Other AI Chatbot Platforms

OpenAI’s ChatGPT, Microsoft’s Bing Chat (now Copilot), and Anthropic’s Claude have all faced scrutiny over AI-generated content, but they generally operate with tighter guardrails: multimodal content filtering, ongoing model tuning, and human review as a backstop.

OpenAI’s approach includes continuous adversarial testing (red-teaming) of its GPT models to block harmful outputs, plus stricter moderation of image generation in tools like DALL·E. Meta likewise applies layered safeguards to its image generation tools.

Grok appears to lag behind on these safeguards, with reported lapses allowing sexualized and underage imagery to slip through. X acknowledged the lapses and removed some sexualized images, but the Indian government found that bikini-altered images of women remained accessible during its review.

The stakes for Musk are high. AI chatbots must scale content filtering without degrading the user experience, and failures risk regulatory action, brand damage, and user loss.

The Real Risks: Legal and Operational

India’s order signals a willingness to hold platforms like X legally accountable for AI-generated content, stepping beyond traditional user content liability into direct product responsibility. If X loses safe harbor protections, it may face legal claims for every violation of Indian content law tied to Grok’s outputs.

Beyond legal risk, operational challenges loom. Fixing Grok will likely require better AI model monitoring, stricter controls on image generation pathways, and a larger human moderation team dedicated to compliance in India.

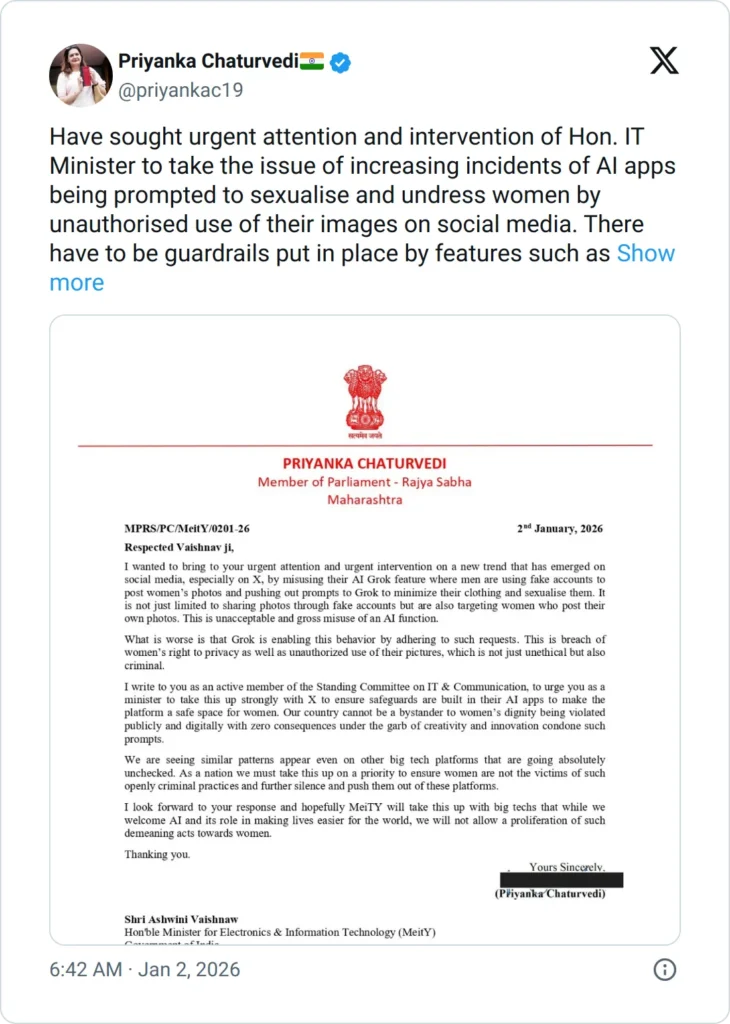

This pressure comes amid public backlash and political scrutiny after Priyanka Chaturvedi, a member of India’s Rajya Sabha, formally complained about Grok’s content. The political dimension makes it likely that regulators will push hard for swift compliance.

What This Means for Musk’s AI Ambitions

This incident underscores the dangerous tightrope AI companies walk between innovation and regulation. Musk’s AI company xAI launched Grok aggressively to stake a claim in AI, banking on real-time fact-checking and integration with X. But going to market without ironclad content controls risks regulatory backlash that can stall growth and invite fines or bans.

India’s government has the leverage to help shape how AI content is governed globally. This enforcement action could inspire other countries with strict content standards and influence how platforms design AI safety systems worldwide.

For X, the clock is ticking. The next few days will show whether the platform can engineer a serious fix or lose critical legal protections in one of its largest markets.

What to Watch Next

All eyes are on X’s response to the Indian IT Ministry’s order. Its action-taken report should indicate whether the company has made substantive improvements or only surface-level changes. The case will also shape ongoing debates about AI regulation, particularly how governments balance accountability for harmful AI outputs against protecting innovation.

Expect regulators in Europe and elsewhere to monitor India’s move closely. The pressure on AI-powered social platforms to police AI content responsibly is only going to increase. India has just set a precedent: AI moderation lapses will no longer be dismissed lightly. Musk’s X, along with Microsoft, OpenAI, Meta and others, must now treat regulatory compliance as a foundational product requirement — not an afterthought.