The AI world didn’t just heat up in November 2025; it ignited. In the span of six days, OpenAI dropped GPT-5.1, Anthropic released Sonnet 4.5, and then Google arrived with a knockout punch:

Gemini 3 is the first AI system in history to cross 1500 Elo on LMArena.

Hashtechwave

Not an incremental bump. Not a gentle upgrade. A violent leap forward that pushed Google from “catching up” to “leading the frontier.” With features like Vibe Coding, Google Antigravity and DeepThink, Gemini 3 aims to reshape how we use AI and also how we build software, run companies and interact with computers. This is a deep dive into Gemini 3 for developers, CTOs, tech enthusiasts and investors who want more than hype: clarity, analysis and context.

What Is Gemini 3? Google’s Frontier Multimodal Breakthrough

Gemini 3 is Google’s newest frontier-scale multimodal model, launched globally on November 18–19, 2025. Built upon the Gemini 2.5 Pro architecture, it brings major leaps across reasoning, multimodal understanding, coding, visual intelligence and agentic capabilities.

According to Google’s internal data and independent benchmarkers:

- It scored 1501 Elo on LMArena, the highest ever recorded.

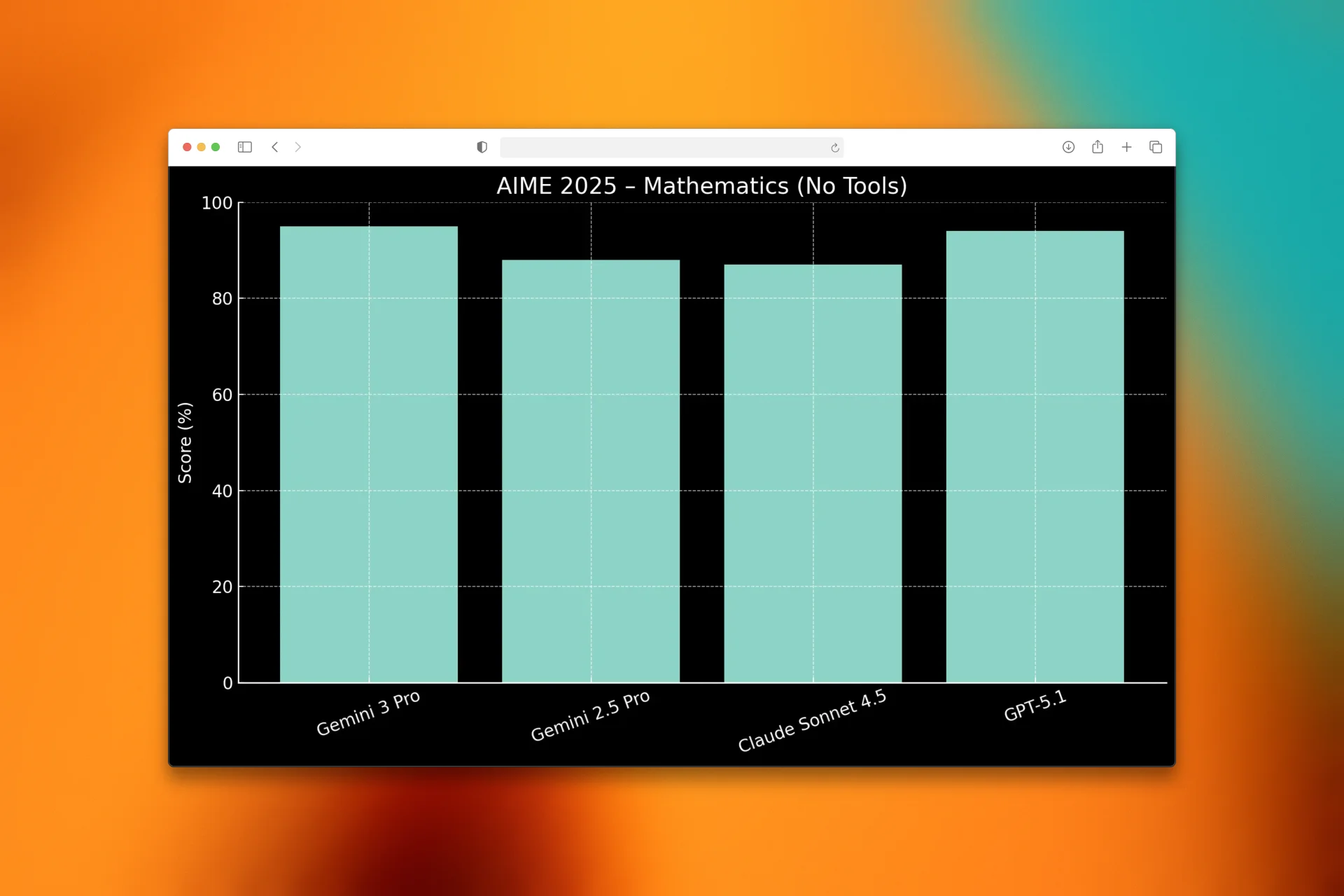

- It achieved a perfect 100% on AIME 2025 mathematics.

- It doubled or tripled competitor scores on vision & screenshot reasoning.

- It reached 81% on MMMU-Pro and 87.6% on Video-MMMU, redefining multimodal AI.

- It now powers Google Search’s AI Mode, Workspace, the Gemini App, Vertex AI and Android Studio.

We can say that this isn’t a single model but a new foundation for the entire AI ecosystem.

Inside the Architecture: What Makes Gemini 3 Different?

Compared to Gemini 2.5 Pro, this generation introduces:

1. Enhanced Long-Horizon Reasoning

Gemini 3 sustains multi-step thought processes far more reliably, crucial for enterprise workflows, engineering tasks and agentic execution.

2. Multimodal Fusion at the Core

- Not added-on modalities.

- Not bolted-together encoders.

This latest Google model processes text, images, video, audio and code as a single fused reasoning system.

3. Tool-Use & Planning Improvements

The model can break tasks into subtasks, plan execution, use tools and verify its own steps. On the τ2-bench agentic test, Gemini 3 Pro scored 85.4%, surpassing Gemini 2.5 Pro’s 54.9%.
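The plan, execute and verify cycle described above can be sketched in a few lines. This is a minimal illustration, not Gemini's actual implementation: the `plan`, `verify` and `run_agent` functions and the two toy tools are hypothetical stand-ins, and a real agent would ask the model to produce the plan and to judge each result.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    tool: str       # name of the tool to call
    argument: str   # input handed to the tool


# Hypothetical tools: plain functions standing in for real integrations.
TOOLS: dict[str, Callable[[str], str]] = {
    "word_count": lambda text: str(len(text.split())),
    "shout": lambda text: text.upper(),
}


def plan(task: str) -> list[Step]:
    """Stand-in planner; a real agent would ask the model for this list."""
    return [Step("word_count", task), Step("shout", task)]


def verify(step: Step, output: str) -> bool:
    """Stand-in check; here we only require a non-empty result."""
    return bool(output.strip())


def run_agent(task: str, max_attempts: int = 2) -> list[tuple[str, str]]:
    """Run every planned step, retrying a step until it passes verification."""
    results = []
    for step in plan(task):
        for _ in range(max_attempts):
            output = TOOLS[step.tool](step.argument)
            if verify(step, output):
                results.append((step.tool, output))
                break
        else:
            # No attempt passed verification, so stop instead of continuing blindly.
            raise RuntimeError(f"step {step.tool!r} failed verification")
    return results


print(run_agent("ship the finance tracker"))
```

The point of the structure is that verification sits inside the loop: a failed step is retried or aborted before later steps build on it.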

4. A Smarter Conversational Style

The model’s tone changed. It is more direct, less flattering and more analytical. Google intentionally designed Gemini 3 to:

Tell you what you need to hear, not what you want to hear.

This is closer to a research assistant than a generic chatbot.

The Vibe Coding Revolution

One of Gemini 3’s hallmark features is what Google calls Vibe Coding, a radical leap in how developers build software. Imagine describing an app like this:

I want a clean, minimalist finance tracker with pastel colors, daily insights, and a playful onboarding animation.

And instead of returning code snippets, Gemini 3 generates a full UI design, backend APIs, animations, theming, and state management with responsive layouts. In other words: No syntax. No boilerplate. No debugging the scaffolding. Just intent and working software.

Google claims that the latest Gemini model dominates coding environments, topping:

- WebDev Arena

- Design Arena

- LiveCodeBench Pro (where it jumped from 1,775 to 2,439)

This matches independent benchmarks confirming exceptional performance in web apps, game development, 3D design and UI components. These stats show that vibe coding is not just autocomplete; it is semantic software creation.

Google Antigravity — The New Agentic Development Platform

Google quietly introduced a second breakthrough alongside Gemini 3:

Google Antigravity

A brand-new agentic platform designed for multistep development and execution.

- Google’s answer to OpenAI’s Assistants API

- Google’s response to Anthropic’s Claude Agents

- A next-gen agent platform built directly into Gemini’s core

What Antigravity Allows

- Multi-step autonomous workflows

- Code planning + execution

- Interface generation

- Long-horizon tool usage

- Automated debugging

- Building apps from scratch

On Vending-Bench 2, which measures long-term strategy:

- Gemini 3 scored $5,478.16

- Claude Sonnet 4.5 scored $3,838.74

- GPT-5.1 scored $1,473.43

This gap reflects a crucial reality:

Agentic AI is becoming the next competitive battleground, and Google is leading it.

Benchmark Battle: Gemini 3 vs GPT-5.1 vs Claude 4.5

| Benchmark / Feature | Gemini 3 Pro | GPT-5.1 | Claude Sonnet 4.5 |
|---|---|---|---|
| LMArena Elo | 1501 | 1470s | 1440s |
| HLE (w/o tools) | 37.5% | ~22% | 13.7% |
| HLE (DeepThink) | 41.0% | N/A | N/A |
| AIME 2025 | 100% | 94% | 100% |
| MathArena Apex | 23.4% | 16–18% | ~20% |
| ARC-AGI-2 | 31.1% | 17.6% | 13.6% |
| ARC-AGI-2 (DeepThink) | 45.1% | N/A | N/A |
| ScreenSpot-Pro | 72.7% | ~4% | ~36% |
| MMMU-Pro | 81% | ~70% | ~72% |
| Video-MMMU | 87.6% | ~76% | ~78% |
| WebDev Arena Elo | 1487 | 1320–1380 | ~1400 |
| Vending-Bench 2 | $5,478 | $1,473 | $3,838 |
| Agentic Score | 85.4% | ~55% | ~62% |
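To put the LMArena Elo gaps in perspective, the sketch below converts rating differences into expected head-to-head win rates. It assumes the standard Elo logistic formula on a 400-point scale, which may differ from the exact statistical model LMArena uses, and it reads the table's "1470s" and "1440s" as 1470 and 1440 for illustration only.

```python
def expected_win_rate(rating_a: float, rating_b: float) -> float:
    """Standard Elo expected score of A against B (400-point logistic scale)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Ratings taken from the table above. The rival values are assumptions:
# the article only gives "1470s" and "1440s".
ratings = {"Gemini 3 Pro": 1501, "GPT-5.1": 1470, "Claude Sonnet 4.5": 1440}

for rival in ("GPT-5.1", "Claude Sonnet 4.5"):
    p = expected_win_rate(ratings["Gemini 3 Pro"], ratings[rival])
    print(f"Gemini 3 Pro vs {rival}: {p:.1%} expected win rate")
```

Under these assumptions, the lead translates to roughly a 54% expected win rate against GPT-5.1 and roughly 59% against Claude Sonnet 4.5: a clear edge in pairwise votes, but far from winning every comparison.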

My Verdict: Gemini 3 dominates the 2025 benchmark landscape.

GPT-5.1 remains strong in creativity and writing. Claude Sonnet 4.5 remains strong in analysis and summarization. But Gemini leads in raw intelligence and agentic capability.

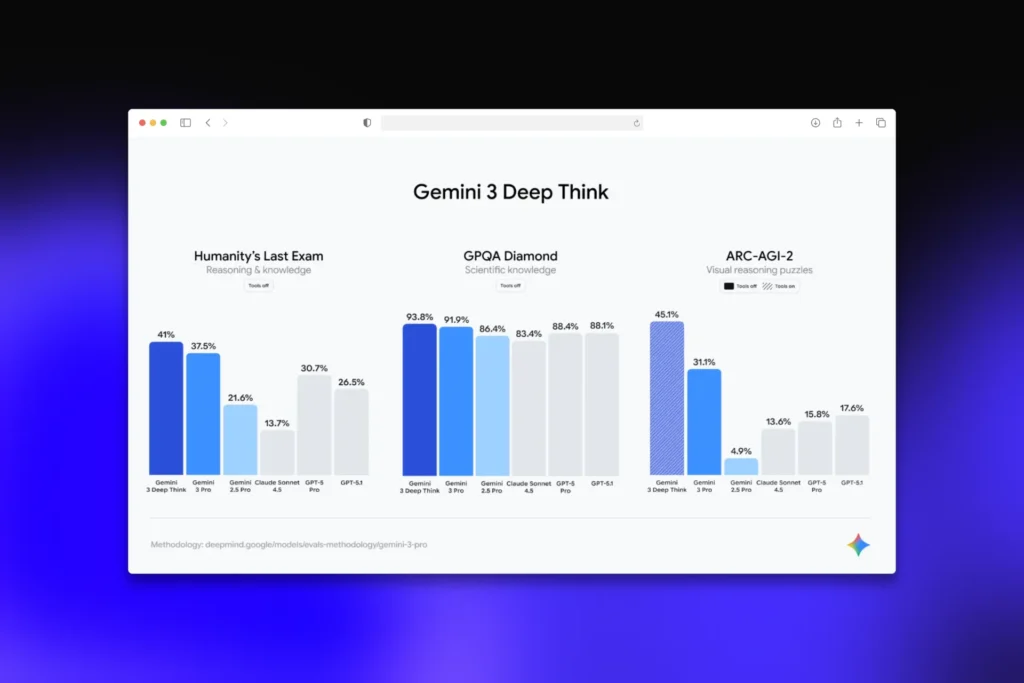

Gemini 3 DeepThink — Google’s High-Performance Reasoning Mode

If Gemini 3 Pro is powerful, DeepThink is monstrous. DeepThink is Google’s “slow-thinking” mode: in effect, it gives the model extra time, extra memory and extra reasoning layers in exchange for higher accuracy.

DeepThink Achieves:

- 41% HLE (no tools)

- 93.8% GPQA Diamond

- 45.1% ARC-AGI-2

These are historic numbers. DeepThink is not just for math problems; it is for:

- Investment analysis

- Scientific research

- Complex debugging

- Legal reasoning

- Architecture planning

- Multi-agent orchestration

- Enterprise decision-making

At launch, DeepThink is rolling out to Google AI Ultra subscribers first, with API access expected soon afterward.

Gemini 3 x Google Search — AI Mode Arrives

Gemini 3 is deeply integrated into Google Search, not as a sidebar or a lab feature but as a core search engine layer.

What AI Mode Does

- Handles complex reasoning queries, such as booking

- Processes multimodal inputs (text + image + video)

- Generates structured, browsable answers

- Solves multi-step research tasks

- Provides citations from across the web

This changes SEO forever:

- AI Overviews will rely on Gemini 3 for complex queries.

- News, product reviews, and long-tail queries shift from link-driven to answer-driven.

- Structured data and E-E-A-T matter more than ever.

- Image SEO becomes critical, since Gemini 3 now “reads” visual content at AGI-adjacent levels.

Gemini in Google Workspace: The Productivity Upgrade of the Decade

| Product | What Gemini 3 adds |
|---|---|
| Gemini in Gmail | Multi-email summarization; automated follow-ups; sales pipeline triage; inbox command execution |
| Gemini in Docs | Research assistant mode; DeepThink analysis for complex documents; code and UI generation directly inside Docs |
| Gemini in Sheets | Forecasting & modeling; data visualization; custom dashboards generated from plain language |
| Gemini in Slides | AI storyboard creation; theme generation matching brand guidelines |
| Gemini for Meetings | Real-time summaries; multimodal transcription; stakeholder action plans |

Gemini 3 in Android Studio — The Future of App Development

Android developers get:

- Vibe-coded app generation

- Real-time debugging

- UI/UX prototyping from a single sentence

- Antigravity agents to refactor, test, and package builds

This is the closest the industry has come to:

Describe the app. Ship the app.

Gemini 3 vs GPT-5.1 — Who Wins in 2025?

OpenAI has held the lead for years. But in late 2025, the narrative shifted.

| Gemini 3 | GPT-5.1 | Claude Sonnet 4.5 |
|---|---|---|
| Benchmark accuracy; visual reasoning; screenshot processing; agents & long-horizon tasks; web development & UI generation; multimodal integration; Vibe Coding; Android & Google ecosystem power | Creativity & writing; fiction & narrative generation; conversational personality; third-party developer ecosystem maturity | Clean reasoning; safe outputs; document-heavy tasks |

The Future of Agentic AI and Why Gemini 3 Matters

Gemini 3 signals a shift. We are moving from:

- Chatbots → Coworkers: agents that plan, execute, verify, and adapt.

- Static UIs → Generative UIs: software that designs itself for each task.

- Language Models → Autonomous Systems: AI that acts, not just answers.

The companies that integrate with Gemini early will see faster development cycles, lower engineering costs, more automation and competitive advantages in product velocity. The companies that resist will fall behind.

What is Gemini 3?

Gemini 3 is Google’s 2025 flagship AI model featuring advanced multimodal reasoning, agentic capabilities, Vibe Coding, and a new DeepThink reasoning mode.

What is Vibe Coding in Gemini 3?

Vibe Coding lets developers describe software in natural language (“the vibe”), and Gemini 3 generates full codebases, UIs, and workflows automatically.

When was Gemini 3 DeepThink released?

DeepThink is rolling out after the November 18–19, 2025 launch, with initial access for Google AI Ultra subscribers and API access expected soon afterward.

How does Gemini 3 compare to GPT-5.1?

Gemini 3 surpasses GPT-5.1 in reasoning, vision, multimodal tasks, agentic workflows, and coding, while GPT-5.1 still leads in creativity and writing.

Is Gemini 3 available through API?

Yes, via Google AI Studio, Vertex AI, the Gemini API and the Gemini CLI.
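As a rough sketch of what a Gemini API call looks like, the example below builds a `generateContent` request using only the Python standard library. The model ID `gemini-3-pro-preview` is an assumption and should be checked against Google's current model list; `build_request` and `generate` are illustrative helper names, not part of any SDK, and the response parsing assumes the first candidate contains a plain text part.

```python
import json
import urllib.request

API_ROOT = "https://generativelanguage.googleapis.com/v1beta"
MODEL_ID = "gemini-3-pro-preview"  # assumption: verify the exact ID in Google AI Studio


def build_request(prompt: str, api_key: str, model: str = MODEL_ID) -> urllib.request.Request:
    """Build (but do not send) a generateContent request for a text prompt."""
    url = f"{API_ROOT}/models/{model}:generateContent"
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def generate(prompt: str, api_key: str) -> str:
    """Send the request and return the text of the first candidate."""
    with urllib.request.urlopen(build_request(prompt, api_key), timeout=60) as resp:
        payload = json.load(resp)
    # Assumes the first part of the first candidate is plain text.
    return payload["candidates"][0]["content"]["parts"][0]["text"]


# Example (needs a real key from Google AI Studio, so it is left commented out):
# print(generate("Summarize Gemini 3 in one sentence.", "YOUR_API_KEY"))
```

For production use, Google's official SDKs or Vertex AI clients are likely a better fit than raw HTTP, since they handle authentication, retries and streaming.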