OpenAI CEO Sam Altman expressed significant concern that social media platforms are losing authenticity due to the proliferation of AI-driven bots. In a series of posts on X (formerly Twitter) on Monday, Altman pointed to Reddit discussions where even content supporting his own company felt artificially generated. His remarks highlight a critical challenge to online trust and the integrity of digital conversations.

The Blurring Line Between Human and Machine

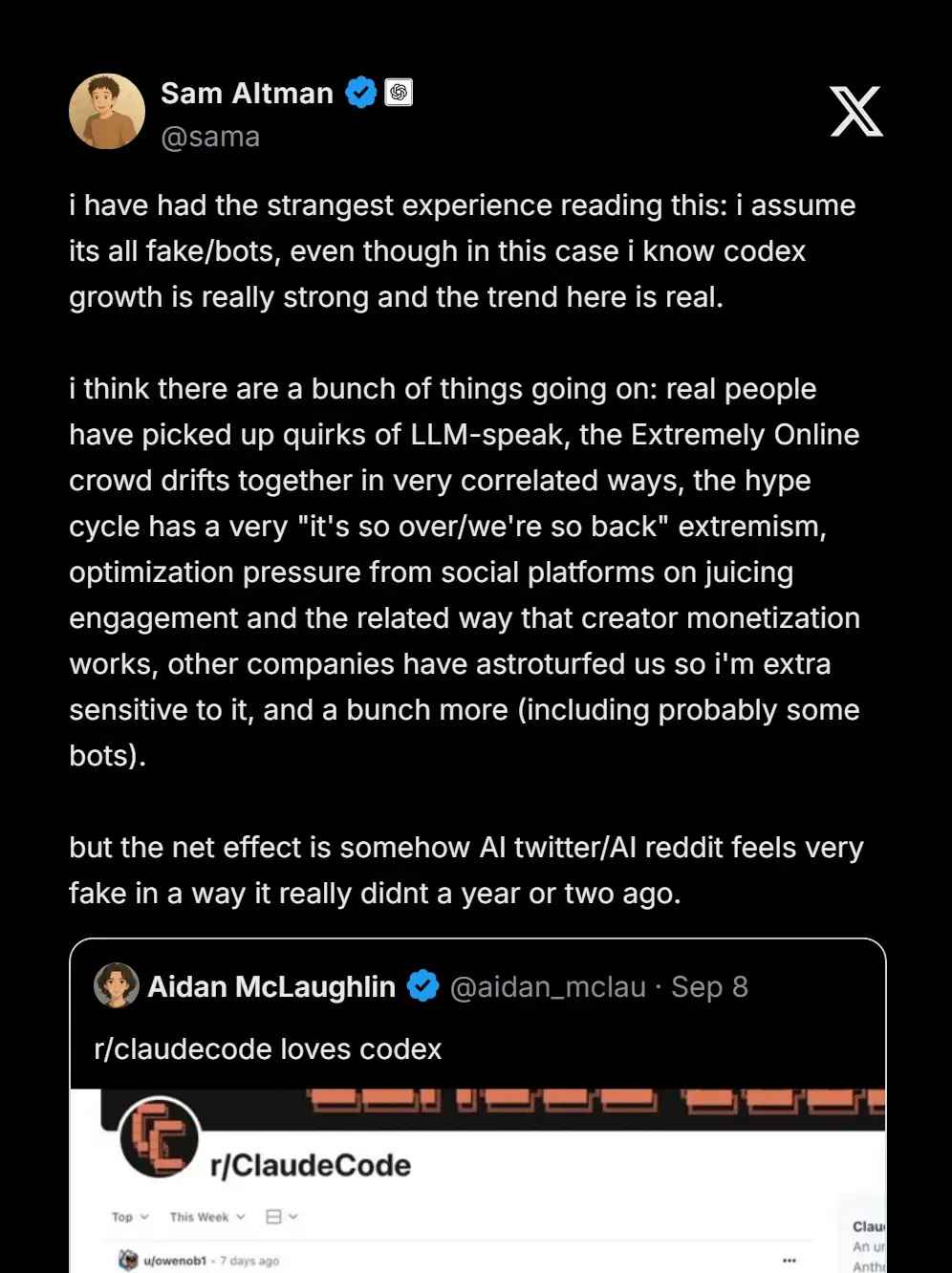

Sam Altman, a prominent figure in the AI industry and a Reddit shareholder, recently highlighted the growing difficulty in distinguishing between genuine human interaction and automated bot activity online. His concerns stemmed from observing threads in AI-focused subreddits, such as r/Claudecode, where discussions around tools like OpenAI Codex felt suspiciously artificial. “I assume it’s all fake/bots,” Altman stated, expressing his skepticism even towards posts that appeared to praise his company’s products.

Altman attributed this phenomenon to several factors:

- AI-Influenced Language: Humans are beginning to adopt the linguistic patterns of AI models.

- Algorithmic Behavior: Platform incentives and hype cycles push online communities toward uniform, highly correlated behavior.

- Potential Astroturfing: The possibility of coordinated campaigns by third parties to artificially shape public opinion.

He specifically noted that OpenAI itself has been a target of “astroturfing,” where third-party actors post supportive content that may not be genuine. This erosion of trust is compounded by data suggesting that over half of all internet traffic is now generated by bots, a figure significantly boosted by the rise of Large Language Models (LLMs).

Why This Warning Matters for the Future of the Internet

Altman’s warning is not just a casual observation; it is a critical commentary on the core of our digital society. As AI models, many of which are trained on vast datasets from platforms like Reddit, become more sophisticated at mimicking human expression, the very foundation of online authenticity is at risk.

The key implications include:

- Erosion of Trust: If users cannot trust that they are interacting with real people, the value of social media as a space for genuine connection and discussion diminishes.

- Information Integrity: The line between organic conversation and AI-driven manipulation becomes dangerously blurred, increasing the risk of widespread misinformation campaigns.

- The Future of Platforms: These comments are fueling speculation that OpenAI might venture into creating its own social platform, although Altman’s points suggest such a platform would face the same challenges of preventing AI-driven echo chambers.

As platforms and regulators grapple with this new reality, Altman’s critique from the heart of the AI industry emphasizes the urgent need for new solutions to verify authenticity and preserve the integrity of our online world.
